Nvidia Rubin Architecture Sets New Benchmark for AI Compute


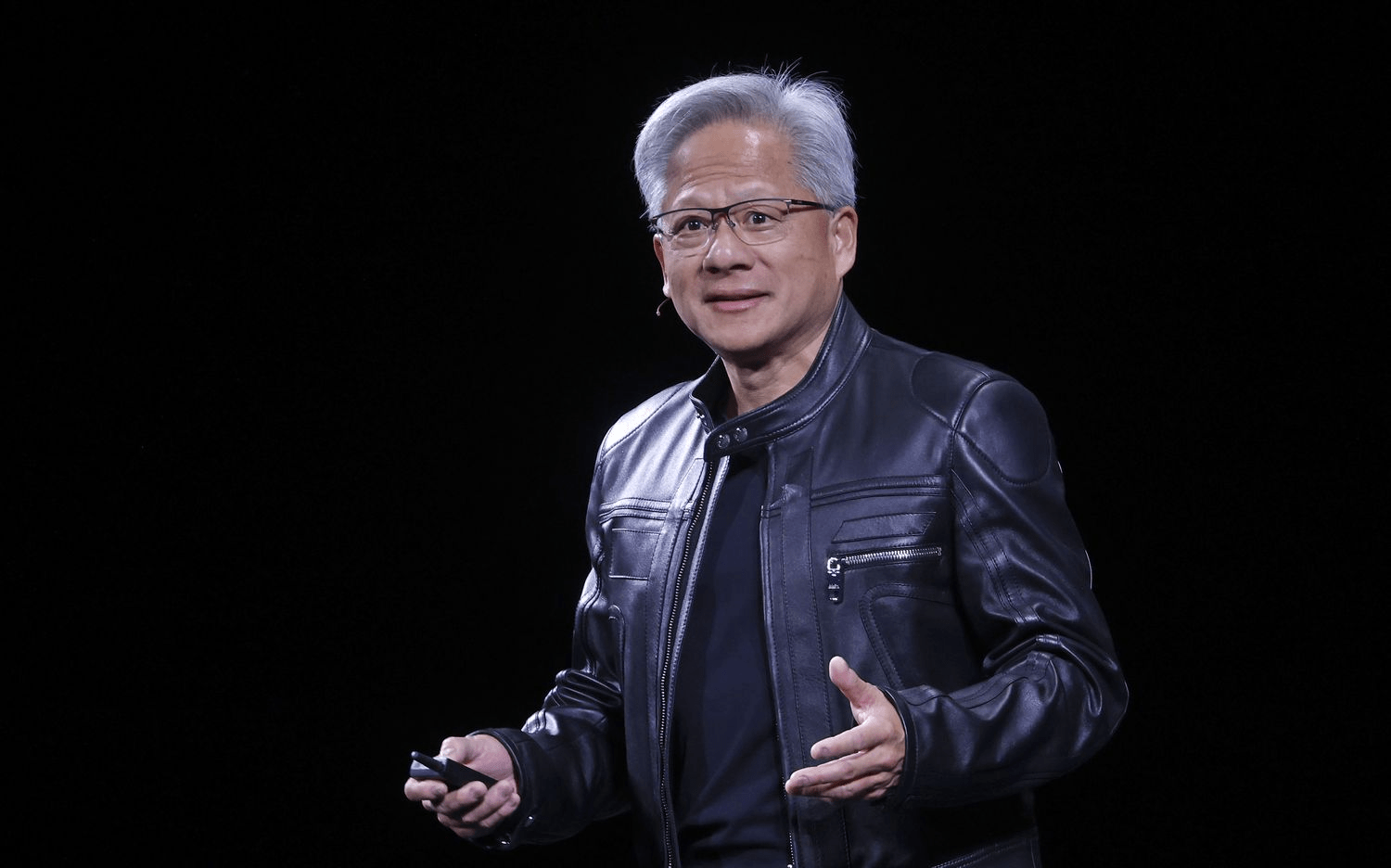

Nvidia’s Rubin architecture is now in full production, marking a major shift in AI hardware design. Announced at CES 2026, the platform is Nvidia’s latest response to surging demand for AI compute. According to Nvidia, production is already under way and is scheduled to scale up in the second half of the year. The launch signals how infrastructure decisions are being reshaped by training and inference workloads that continue to grow in size and complexity.

The Nvidia Rubin architecture replaces Blackwell, continuing a rapid generational cycle that has defined Nvidia’s hardware strategy. As AI models grow more demanding, compute, storage, and interconnect bottlenecks have become strategic constraints rather than technical details. Consequently, Rubin is positioned as a systems-level answer, not just a faster chip.

What the Nvidia Rubin architecture changes in AI infrastructure

The Nvidia Rubin architecture consists of six chips designed to operate together. At its center sits the Rubin GPU, supported by new components that target storage and interconnect limits. Nvidia also updated its BlueField and NVLink systems to address the data movement bottlenecks that increasingly constrain AI performance.

In addition, the platform includes a new Vera CPU built for agentic reasoning. This design reflects the shift toward longer-running and more autonomous AI workflows. As these workflows grow, memory and cache pressure increase sharply. Nvidia acknowledged this by introducing a new external storage tier connected directly to the compute device.

This architectural shift highlights how AI infrastructure planning now requires broader system thinking. For enterprises navigating similar transitions, advisory platforms like https://uttkrist.com/explore/ help organizations evaluate technology decisions in a global and structured manner.

Performance gains claimed by Nvidia Rubin architecture

Nvidia states that the Rubin architecture runs model training tasks 3.5 times faster than Blackwell. For inference, the claimed gain rises to five times, reaching up to 50 petaflops. The platform also supports eight times more inference compute per watt, according to the company.
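To make the arithmetic behind these claims concrete, here is a minimal Python sketch. It uses only the multipliers Nvidia quotes above; the helper names and the 35-day training job are hypothetical, and the implied baseline assumes the five-times figure and the 50-petaflop peak describe the same inference metric, which the announcement does not spell out.

```python
# Multipliers as claimed by Nvidia in this article; not independently measured.
TRAINING_SPEEDUP = 3.5          # Rubin vs. Blackwell, model training
INFERENCE_SPEEDUP = 5.0         # Rubin vs. Blackwell, inference
RUBIN_INFERENCE_PFLOPS = 50.0   # claimed peak inference throughput
INFERENCE_PER_WATT_GAIN = 8.0   # claimed inference compute per watt


def implied_baseline_pflops(rubin_pflops: float, speedup: float) -> float:
    """Baseline throughput implied if peak and speedup refer to the same metric."""
    return rubin_pflops / speedup


def scaled_training_days(baseline_days: float, speedup: float) -> float:
    """Wall-clock days for the same job if the claimed speedup holds end to end."""
    return baseline_days / speedup


def relative_energy(per_watt_gain: float) -> float:
    """Energy for a fixed amount of inference compute, relative to the baseline."""
    return 1.0 / per_watt_gain


if __name__ == "__main__":
    baseline = implied_baseline_pflops(RUBIN_INFERENCE_PFLOPS, INFERENCE_SPEEDUP)
    print(f"Implied Blackwell inference baseline: {baseline} petaflops")

    # Hypothetical 35-day training job, chosen purely for illustration.
    days = scaled_training_days(35.0, TRAINING_SPEEDUP)
    print(f"Hypothetical 35-day job at the claimed speedup: {days} days")

    share = relative_energy(INFERENCE_PER_WATT_GAIN)
    print(f"Energy for the same inference work: {share:.1%} of baseline")
```

Under those assumptions, the claimed figures imply a baseline of roughly 10 petaflops for inference and about one eighth of the energy for the same inference work. Real workloads rarely scale this cleanly, so these numbers are best read as upper bounds on what the vendor claims would deliver.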

These claims underline why demand for advanced AI infrastructure remains intense. Speed and energy efficiency now move together as strategic priorities. As a result, infrastructure buyers must balance performance targets with long-term operational constraints.

Understanding these trade-offs increasingly requires external perspective. Many decision-makers rely on ecosystem partners such as https://uttkrist.com/explore/ to assess how emerging architectures align with business objectives across regions and industries.

Cloud adoption and supercomputing use cases

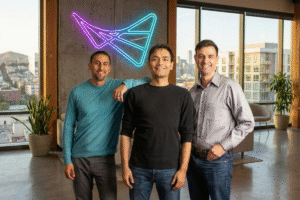

Rubin chips are already planned for deployment across major cloud providers. Nvidia confirmed partnerships involving the AI labs Anthropic and OpenAI, as well as Amazon Web Services. Beyond cloud platforms, Rubin systems will power HPE’s Blue Lion supercomputer and the upcoming Doudna supercomputer at Lawrence Berkeley National Lab.

This wide adoption suggests that the Nvidia Rubin architecture is not limited to experimental use. Instead, it is being positioned as a foundational layer for both commercial AI services and large-scale research systems. Consequently, infrastructure investment decisions made today will shape AI capability for years.

As AI spending accelerates, Nvidia’s leadership expects trillions of dollars to flow into infrastructure over the next five years. Navigating this scale of change requires structured insight, where services like https://uttkrist.com/explore/ support informed planning rather than reactive adoption.

Strategic implications of the Nvidia Rubin architecture

The Nvidia Rubin architecture arrives amid intense competition to supply AI infrastructure. Labs and cloud providers are racing to secure capacity while expanding the facilities needed to power it. By addressing compute, storage, and interconnect together, Nvidia is reinforcing its position at the center of this ecosystem.

However, the broader question remains unresolved. As AI systems become more powerful and more expensive to operate, how will organizations prioritize efficiency, access, and long-term value?

Explore Business Solutions from Uttkrist and our Partners: https://uttkrist.com/explore
