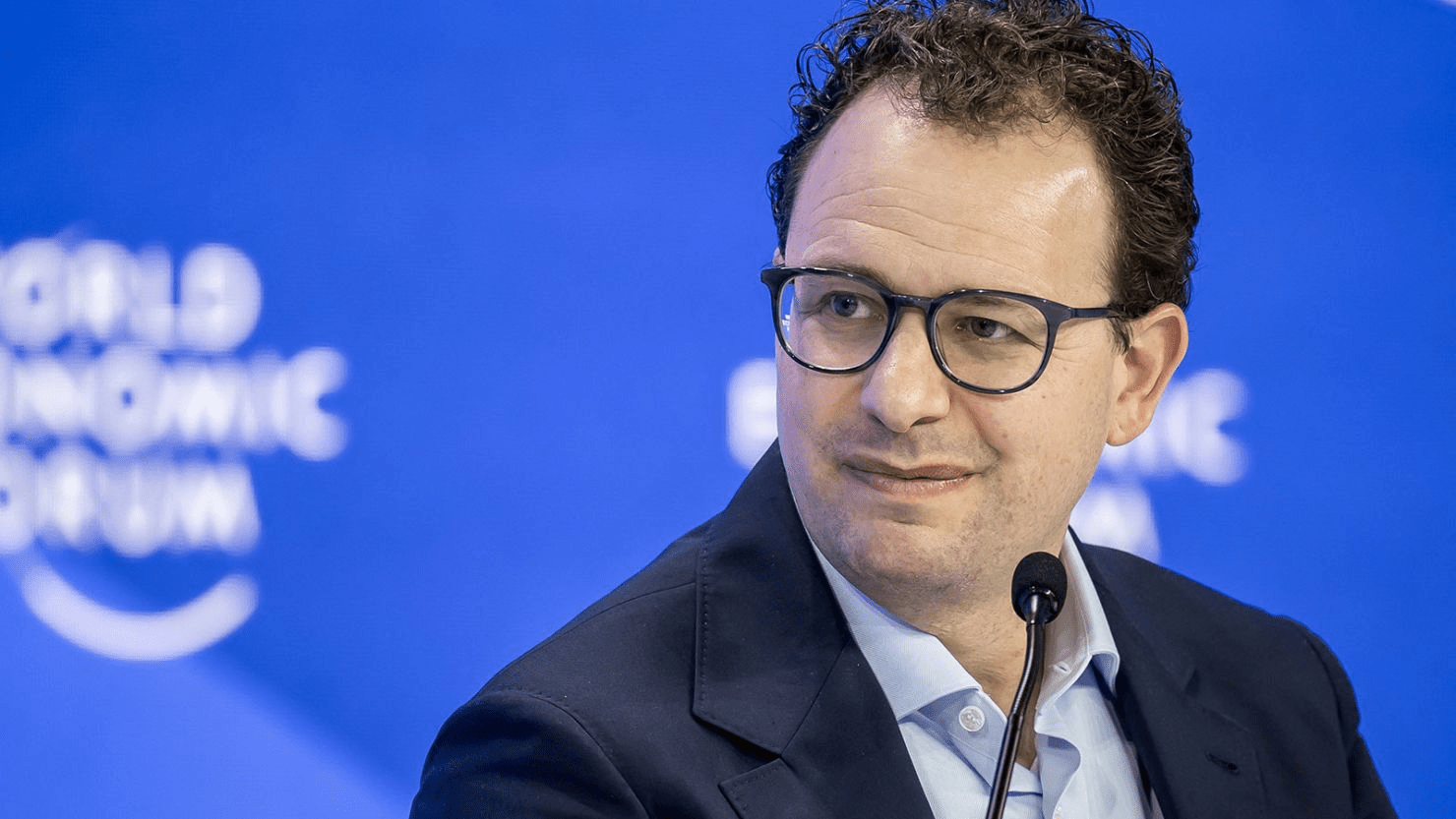

Anthropic CEO Nvidia Criticism Signals a New Phase in the AI Chip Export Debate

The Anthropic CEO's criticism of Nvidia, delivered at the World Economic Forum in Davos, reframed an already sensitive policy debate.

Speaking days after Washington cleared sales of Nvidia's H200 chips to approved Chinese customers, Anthropic's chief executive challenged both policymakers and chipmakers.

The comments landed harder because Nvidia is not a distant supplier. It is a core partner and investor.

This moment matters because it exposes how existential the AI race now feels to industry leaders.

Export controls are no longer abstract trade tools. They are being framed as national security decisions with irreversible consequences.

In this context, the Anthropic CEO's criticism of Nvidia reveals more than a disagreement.

It signals a shift in how openly AI executives are willing to confront governments and partners alike.

The Anthropic CEO's Nvidia criticism and the reversal of U.S. chip export controls

The controversy began after the U.S. administration reversed an earlier restriction.

It officially approved the sale of Nvidia’s H200 chips and an AMD chip line to approved Chinese customers.

These chips are not the most advanced available.

However, they are high-performance processors used for AI workloads.

That fact alone made the decision contentious.

At Davos, Anthropic’s CEO reacted sharply.

He rejected the argument that export restrictions are holding chipmakers back.

He warned the decision would “come back to bite” the United States.

This framing elevates the debate.

It shifts focus from commercial impact to long-term strategic risk.

As a result, his criticism of Nvidia became a focal point of the forum.

Why the Anthropic CEO sees AI chips as a national security issue

During an interview at Davos, the CEO emphasized the U.S. lead in chip-making capabilities.

He argued that shipping these chips would be a major mistake.

He then expanded the argument beyond hardware.

AI models, he said, represent cognition and intelligence at scale.

He described future AI as a “country of geniuses in a data center.”

The analogy was deliberate.

It illustrated why AI compute power has national security implications.

Control over such systems could shape geopolitical power.

From this perspective, chip exports are not routine trade.

They are strategic decisions with lasting consequences.

This is the core logic behind his criticism of Nvidia.

A nuclear analogy that reshaped the Davos conversation

The most striking moment came next.

The CEO compared the decision to selling nuclear weapons components to North Korea.

The analogy was blunt and unsettling.

It instantly reframed the export decision as reckless rather than pragmatic.

What made the statement extraordinary was its context.

Nvidia is not just a supplier.

It provides the GPUs that power Anthropic’s AI models across cloud platforms.

Moreover, Nvidia recently announced an investment of up to $10 billion in Anthropic.

The two companies also described a deep technology partnership.

Against that backdrop, the CEO's remarks sounded like an internal rupture.

It was a rare public clash between strategic partners.

Investor relations, partnerships, and the fading of old constraints

Ordinarily, such rhetoric would trigger immediate damage control.

At Davos, that did not happen.

Anthropic’s position explains why.

The company has raised billions and holds a valuation in the hundreds of billions.

Its Claude coding assistant is widely regarded as a top-tier tool for complex development work.

That strength appears to grant the company unusual freedom.

The CEO could speak without visible fear of business fallout.

The episode suggests something deeper.

In the AI race, traditional constraints are weakening.

Investor relations, partnerships, and diplomatic language matter less when leaders believe stakes are existential.

That shift may define the next phase of AI governance debates.

Strategic implications for AI leaders and policymakers

The clash between Anthropic's CEO and Nvidia underscores a growing divide.

Some AI leaders want stricter controls, even at commercial cost.

Others emphasize market access and global competitiveness.

For decision-makers, this tension is unavoidable.

AI development now sits at the intersection of technology, security, and geopolitics.

Organizations navigating this landscape must think structurally.

They must assess dependencies, policy exposure, and long-term risk.


What this moment reveals about the AI race

Perhaps the most telling detail was not the analogy itself.

It was the confidence behind it.

The CEO spoke as if consequences were secondary to conviction.

That confidence signals how serious the AI race has become in executive minds.

The debate is no longer just about chips.

It is about who controls intelligence at scale.

That reality will shape future policy, partnerships, and public discourse.

As AI leaders grow more vocal, governments will face louder pressure.

The question is whether restraint or acceleration will win.

How should global leaders balance innovation, security, and partnership trust in an era where AI power feels existential?
