Bromism from AI Diet Advice: Rare 19th-Century Disorder Returns

AI Diet Guidance Leads to Bromism

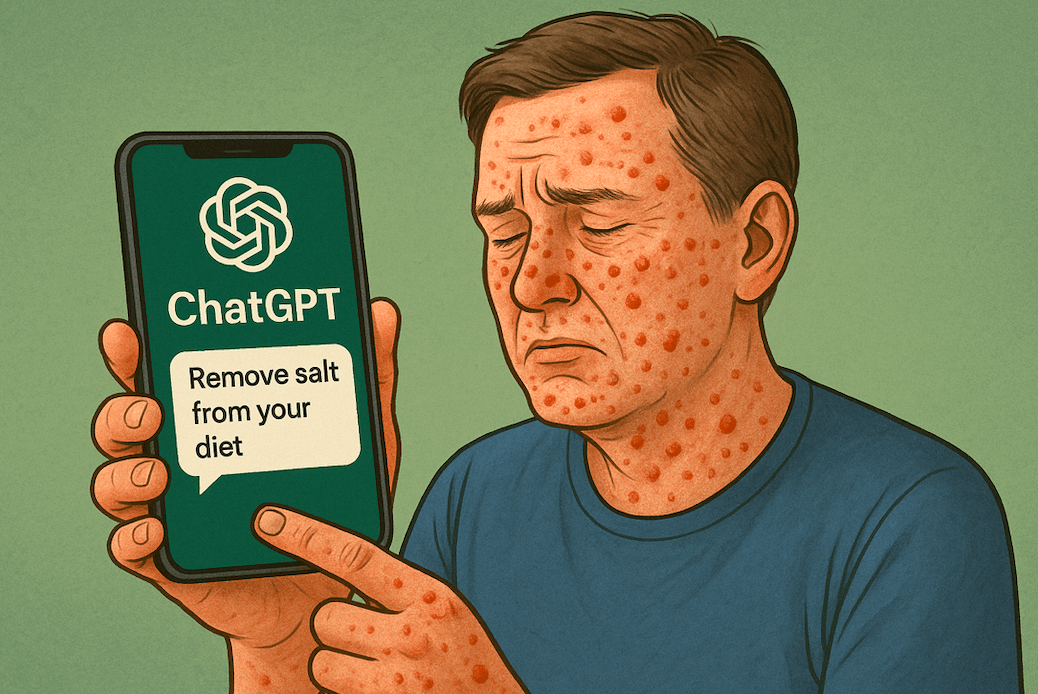

A recent case has highlighted the dangers of relying on artificial intelligence for health guidance without medical supervision. A 60-year-old man developed bromism, a form of chronic bromide poisoning that was common in the 19th century and is now rare, after following dietary advice from ChatGPT. The man had sought to completely remove salt from his diet due to concerns about sodium chloride’s health effects.

Instead of moderation, he pursued total elimination. When he asked ChatGPT for a substitute, the AI mentioned sodium bromide—a compound unsuitable for human consumption. Over three months, this replacement caused significant health deterioration, culminating in psychiatric hospitalization.

Symptoms and Hospitalization

The patient experienced fatigue, insomnia, excessive thirst, poor coordination, acne, cherry angiomas, and a rash. More alarmingly, he developed paranoia, along with auditory and visual hallucinations. He accused his neighbor of poisoning him and attempted to escape care, which led to an involuntary psychiatric hold.

Upon examination, doctors diagnosed bromism, or chronic bromide poisoning. Historically, bromism was common in the late 19th and early 20th centuries, when bromide was widely used in sedatives and headache powders. US regulators began restricting its use in 1975 due to toxicity risks.

AI Model Limitations and Context

The case report suggests the man used an older ChatGPT model (3.5 or 4.0). Researchers attempting to replicate the responses found that while the AI stated “context matters,” it did not issue a clear health warning or question the dietary intent, both critical steps a medical professional would have taken.

This highlights a gap between AI-generated suggestions and professional medical judgment. The absence of real-time verification or follow-up questions allowed a harmful recommendation to be acted upon without safeguards.

Historical and Modern Context of Bromism

Bromism historically resulted from long-term bromide exposure, leading to psychosis, tremors, and even coma. In the past, cases were sometimes mistaken for alcoholism or nervous breakdowns. Modern occurrences are rare, typically arising from industrial exposure or misuse of bromide-containing substances.

This incident is one of the rare modern cases of bromism, and it underscores both the historical dangers of bromide and the present-day risks of misapplied AI advice in health contexts.

Implications for AI Use in Health Guidance

This case emphasizes the need for AI users to approach health-related responses with caution and to cross-check advice with qualified professionals. While AI can provide general knowledge, it cannot replace medical expertise—especially when recommendations involve chemical substances or extreme dietary changes.

What steps should AI platforms take to ensure health advice includes clearer safeguards for users?
